After a decade of building systems, I’ve found that Docker Compose is the sweet spot for most projects: it’s not as complex as Kubernetes, but it’s powerful enough to handle real-world applications. Here’s how I use it to manage a typical microservices stack from my home office in Udaipur.

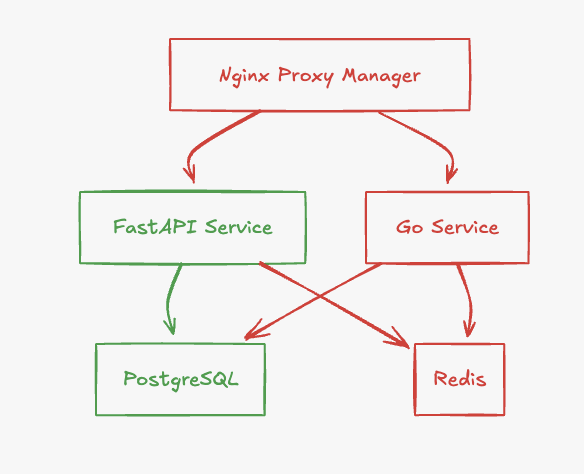

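Let’s start with what we’re trying to achieve: a single file that brings up the whole stack, with Nginx Proxy Manager in front of a FastAPI service and a Go service, both backed by PostgreSQL and Redis.

This is a common setup you’ll encounter, and if you’ve tried managing it manually, you know the headaches: hunting for container IPs, starting services before their databases are ready, losing data when containers are recreated, and keeping internal services isolated.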

Here’s the complete docker-compose.yml that solves all these problems:

```yaml
# Ignored by Compose v2; older docker-compose v1 releases use it to pick the file format
version: '3.8'

services:
  # Reverse proxy that manages Let's Encrypt certificates; port 81 serves its admin UI
  nginx-proxy:
    image: 'jc21/nginx-proxy-manager:latest'
    restart: unless-stopped
    ports:
      - '80:80'
      - '443:443'
      - '81:81'
    volumes:
      - ./data/nginx/data:/data
      - ./data/nginx/letsencrypt:/etc/letsencrypt
    networks:
      - app_network

  fastapi:
    build: ./fastapi
    restart: unless-stopped
    environment:
      - DATABASE_URL=postgresql://user:password@postgres:5432/mydb
      - REDIS_URL=redis://redis:6379/0
    depends_on:
      # Wait until the healthchecks pass, not just until the containers start
      postgres:
        condition: service_healthy
      redis:
        condition: service_healthy
    networks:
      - app_network

  goservice:
    build: ./goservice
    restart: unless-stopped
    environment:
      - DB_CONNECTION=postgres://user:password@postgres:5432/mydb
      - REDIS_ADDR=redis:6379
    depends_on:
      postgres:
        condition: service_healthy
      redis:
        condition: service_healthy
    networks:
      - app_network

  postgres:
    image: postgres:15-alpine
    restart: unless-stopped
    environment:
      - POSTGRES_USER=user
      - POSTGRES_PASSWORD=password
      - POSTGRES_DB=mydb
    volumes:
      - postgres_data:/var/lib/postgresql/data
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U user -d mydb"]
      interval: 10s
      timeout: 5s
      retries: 5
    networks:
      - app_network

  redis:
    image: redis:7-alpine
    restart: unless-stopped
    volumes:
      - redis_data:/data
    healthcheck:
      test: ["CMD", "redis-cli", "ping"]
      interval: 10s
      timeout: 5s
      retries: 5
    networks:
      - app_network

volumes:
  postgres_data:
  redis_data:

networks:
  app_network:
    driver: bridge
```

Services can talk to each other using their service names, which Docker’s embedded DNS resolves on the shared network. No more IP hunting:

```yaml
environment:
  - DATABASE_URL=postgresql://user:password@postgres:5432/mydb
  - REDIS_URL=redis://redis:6379/0
```
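On the application side, the FastAPI service only has to read these variables; the hostname inside each URL is just the service name. Here is a minimal standard-library sketch. It assumes the variable names from the compose file, and `load_service_endpoints` is a hypothetical helper, not part of FastAPI or the original project:

```python
import os
from urllib.parse import urlparse


def load_service_endpoints(env=os.environ):
    """Return (host, port) for each backing service, read from connection URLs.

    Hypothetical helper: the fallback values mirror docker-compose.yml.
    """
    db_url = urlparse(env.get("DATABASE_URL", "postgresql://user:password@postgres:5432/mydb"))
    redis_url = urlparse(env.get("REDIS_URL", "redis://redis:6379/0"))
    return {
        # On app_network, Docker's DNS resolves these names to the current container IPs
        "postgres": (db_url.hostname, db_url.port),
        "redis": (redis_url.hostname, redis_url.port),
    }
```

Because the code only ever sees `postgres` and `redis`, containers can be recreated with new IPs without any configuration change.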

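Healthchecks make sure services are actually ready, not just running. Paired with `condition: service_healthy` under `depends_on`, they hold back `fastapi` and `goservice` until Postgres and Redis answer their probes:

```yaml
healthcheck:
  test: ["CMD-SHELL", "pg_isready -U user -d mydb"]
  interval: 10s
  timeout: 5s
  retries: 5
```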
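The following simulation makes the `retries` setting concrete by modelling how Docker turns a series of probe results into a health status. The container stays `starting` until the first probe passes. Any success marks it `healthy`, and `retries` consecutive failures mark it `unhealthy`. This is a simplified sketch that ignores `start_period` and treats a probe exceeding `timeout` as an ordinary failure; `health_states` is a hypothetical name, not a Docker API:

```python
def health_states(probe_results, retries=5):
    """Replay probe outcomes (True = check exited 0) and return the status after each one.

    Simplified model of Docker's healthcheck bookkeeping (no start_period):
    a success resets the failing streak and marks the container healthy;
    `retries` consecutive failures mark it unhealthy.
    """
    status = "starting"
    failing_streak = 0
    history = []
    for passed in probe_results:
        if passed:
            failing_streak = 0
            status = "healthy"
        else:
            failing_streak += 1
            if failing_streak >= retries:
                status = "unhealthy"
        history.append(status)
    return history


if __name__ == "__main__":
    # Postgres still initialising for the first two probes, then ready
    print(health_states([False, False, True]))
    # -> ['starting', 'starting', 'healthy']
```

With `interval: 10s` and `retries: 5`, a Postgres that never answers would be flagged unhealthy after roughly 50 seconds (five failed probes, ten seconds apart).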

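Named volumes keep your data safe across restarts and even `docker-compose down`; only `docker-compose down -v` deletes them:

```yaml
volumes:
  postgres_data:
  redis_data:
```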

Services on the same network can reach each other; containers that aren’t attached to it can’t:

```yaml
networks:
  app_network:
    driver: bridge
```
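When debugging isolation, it helps to test reachability from inside a container. Here is a minimal sketch. It assumes Python is available in the container you run it from (likely for the FastAPI image, not guaranteed for the others), and `can_reach` is a hypothetical helper:

```python
import socket


def can_reach(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    DNS failures (socket.gaierror), refused connections and timeouts are all
    OSError subclasses, so each of them counts as "not reachable".
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

From a container on `app_network`, `can_reach("postgres", 5432)` should return `True`. From a container that isn’t attached to that network, the service name doesn’t resolve and the call returns `False`.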

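Day-to-day operations come down to a handful of commands:

```bash
# Pull latest images and start services
docker-compose pull
docker-compose up -d

# View running services
docker-compose ps

# Check logs
docker-compose logs -f service_name

# Backup database (-T disables TTY allocation so the redirected dump isn't mangled)
docker-compose exec -T postgres pg_dump -U user mydb > backup.sql

# Update services (--build also rebuilds fastapi and goservice from their source directories)
docker-compose pull
docker-compose up -d --build --remove-orphans
```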
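The backup line is easy to wrap in a small script that writes timestamped dumps instead of overwriting `backup.sql`. Here is a minimal sketch. It assumes the script runs from the project directory with the `docker-compose` binary on PATH. `backup_command`, `backup_path` and `run_backup` are hypothetical helpers, and the `backups/` directory and file naming are illustrative choices:

```python
import subprocess
from datetime import datetime
from pathlib import Path


def backup_command(service="postgres", user="user", db="mydb"):
    """Build the pg_dump invocation; -T stops docker-compose from allocating a TTY."""
    return ["docker-compose", "exec", "-T", service, "pg_dump", "-U", user, db]


def backup_path(directory, db="mydb", now=None):
    """Return a timestamped path such as backups/mydb-20240131-020000.sql."""
    now = now or datetime.now()
    return Path(directory) / f"{db}-{now:%Y%m%d-%H%M%S}.sql"


def run_backup(directory="backups", db="mydb"):
    """Stream pg_dump output into a new file and return its path."""
    target = backup_path(directory, db)
    target.parent.mkdir(parents=True, exist_ok=True)
    with target.open("wb") as out:
        # check=True raises CalledProcessError if docker-compose or pg_dump fails;
        # the partial file is left in place for inspection
        subprocess.run(backup_command(db=db), stdout=out, check=True)
    return target
```

Calling `run_backup()` from a cron job or systemd timer would then leave one file per run, named like `backups/mydb-20240131-020000.sql`.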

Add Portainer for a nice web UI to manage everything:

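```yaml
services:
  portainer:
    image: portainer/portainer-ce
    restart: unless-stopped
    ports:
      - "9000:9000"
    volumes:
      # Gives Portainer full control of the host's Docker daemon
      - /var/run/docker.sock:/var/run/docker.sock
      - portainer_data:/data

volumes:
  # Add this next to postgres_data and redis_data in the existing top-level volumes block
  portainer_data:
```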

Use Environment Files

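Move secrets out of docker-compose.yml into a `.env` file next to it. Compose reads that file automatically and substitutes its values wherever the compose file references them, for example `POSTGRES_PASSWORD=${POSTGRES_PASSWORD}` in the `postgres` service’s `environment` list:

```
# .env
POSTGRES_PASSWORD=your_secure_password
REDIS_PASSWORD=another_secure_password
```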
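To see what Compose does with that file, here is a simplified model of its variable substitution. It reads `KEY=VALUE` lines from `.env`, then replaces `${VAR}` and `${VAR:-default}` in the compose text, letting variables exported in your shell win over `.env`. This approximation is for illustration only and is not Compose’s actual parser, which also handles quoting, `$$` escapes and other forms. `parse_env` and `interpolate` are hypothetical names:

```python
import os
import re

# Matches ${NAME} and ${NAME:-default}; other forms ($NAME, ${NAME-default}) are left untouched
_VAR = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)(?::-([^}]*))?\}")


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments (no quote handling)."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()
    return values


def interpolate(template, dotenv, environ=os.environ):
    """Replace variable references; shell environment values take precedence over .env."""

    def lookup(match):
        name, default = match.group(1), match.group(2)
        value = environ.get(name, dotenv.get(name))
        if value:  # set and non-empty
            return value
        if default is not None:  # ${NAME:-default} also applies when the value is empty
            return default
        return ""  # Compose warns and substitutes a blank string for unset variables

    return _VAR.sub(lookup, template)
```

For instance, interpolating `POSTGRES_PASSWORD=${POSTGRES_PASSWORD}` with the `.env` above yields `POSTGRES_PASSWORD=your_secure_password`, unless the variable is also exported in your shell.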

Resource Limits

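```yaml
services:
  fastapi:
    deploy:
      resources:
        limits:
          cpus: '0.50'
          memory: 512M
```

Compose v2 (`docker compose`) applies these limits on a plain `up`. Older `docker-compose` v1 releases ignore the `deploy` section outside Swarm unless you run them with `--compatibility`.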
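What do those numbers mean at the kernel level? Docker’s documentation describes `--cpus=1.5` as equivalent to a CFS period of 100000 µs with a quota of 150000 µs. This sketch does that arithmetic and converts memory sizes to bytes, assuming the binary (1024-based) units Docker uses for memory limits. `cfs_quota` and `memory_bytes` are hypothetical helpers:

```python
import re

CFS_PERIOD_US = 100_000  # CFS period in microseconds that Docker pairs with --cpus

# Binary multipliers (assumed): 1k = 1024 bytes, 1m = 1024 * 1024 bytes, ...
_UNITS = {"b": 1, "k": 1024, "m": 1024**2, "g": 1024**3}


def cfs_quota(cpus):
    """Translate a `cpus` limit into (period, quota), both in microseconds."""
    return CFS_PERIOD_US, round(float(cpus) * CFS_PERIOD_US)


def memory_bytes(size):
    """Convert sizes such as '512M', '512mb' or '1g' to bytes."""
    match = re.fullmatch(r"(\d+)([bkmg]?)b?", size.strip().lower())
    if not match:
        raise ValueError(f"unrecognised size: {size!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit or "b"]
```

For the `fastapi` limits above, that works out to a 50000 µs quota per 100000 µs period (half of one CPU) and 536870912 bytes of memory. CPU overuse is throttled, while exceeding the memory limit gets the process OOM-killed.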

Logging Configuration

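```yaml
services:
  fastapi:
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"
```

By default the json-file driver doesn’t rotate logs, so they grow without limit. With these options each container keeps at most three files of 10m each, about 30 MB in total.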

Docker Compose hits the sweet spot for most projects. It’s simple enough to understand quickly but powerful enough to run production workloads. Start here, and only move to Kubernetes when you actually need its features.

P.S.: Running this setup in production? Remember to secure your services, set resource limits, and configure proper logging. Feel free to reach out if you need help!